The Dawn of Software 3.0

Andrej Karpathy, who coined the term "vibe coding", recently gave a talk introducing the concept of Software 3.0. Now, I've poked my fair share of fun at "vibe coding", but in fairness that has less to do with Karpathy and more to do with the life of its own the term has taken on in the AI landscape. Software 3.0, though, is a major paradigm shift in how we approach software - not just in how we write it, but in how we interact with it as users.

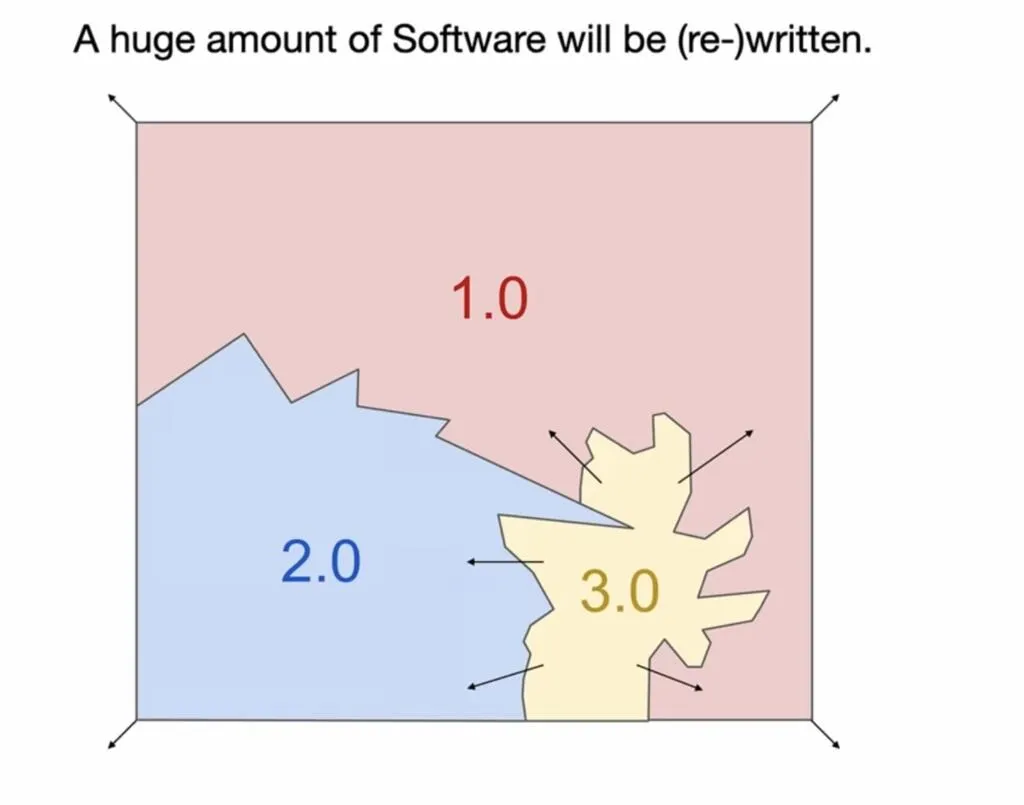

To frame this idea more clearly, software can essentially be broken down into three major iterations.

Software 1.0 - this is the software we most traditionally understand. It’s static and deterministic, both in how it’s written and in how it maps inputs to outputs. A web app with a UI built from HTML, CSS and JavaScript is a classic example: users can press buttons, fill in form elements and so on, and everything that happens is the direct result of logic a developer wrote explicitly. No surprises - and no flexibility.

Software 2.0 - the next step in this progression consists of machine learning systems like classifiers, the kind you’d train on ImageNet. Here, we aren’t writing rules line by line. Instead, we're feeding models vast amounts of data and letting optimisers infer internal parameters. This gave rise to neural networks as fixed-function tools: image in, label out. It revolutionised tasks like object detection and speech recognition. Importantly, Karpathy notes that during his time at Tesla, this kind of system quite literally started replacing the 1.0 stack. Old-school C++ logic was stripped away as neural networks took over perception, tracking, and sensor fusion - all tasks that previously required thousands of lines of carefully hand-crafted code.
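To make the difference between the first two iterations concrete, here is a toy Python sketch (an illustration only, not taken from Karpathy's talk). The 1.0 version hard-codes a decision threshold. The 2.0 version infers its parameters from a handful of made-up labelled examples, using a single-neuron perceptron as a stand-in for a real neural network. The function names and data are hypothetical.

```python
# Toy contrast between Software 1.0 and Software 2.0 (illustrative only).

# Software 1.0: a developer writes the decision rule explicitly.
def is_large_v1(x: float) -> int:
    # The threshold 5 is chosen by hand.
    return 1 if x > 5 else -1


# Software 2.0: the rule's parameters are inferred from labelled examples.
def train_perceptron(data, max_epochs=100):
    """Fit a weight w and bias b with the classic perceptron update rule."""
    w, b = 0.0, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:  # y is -1 or +1
            if y * (w * x + b) <= 0:  # misclassified, or on the boundary
                w += y * x
                b += y
                mistakes += 1
        if mistakes == 0:  # every example is now classified correctly
            break
    return w, b


def make_classifier(w, b):
    # The "program" is just the learned numbers w and b.
    return lambda x: 1 if w * x + b > 0 else -1


examples = [(1, -1), (2, -1), (3, -1), (7, 1), (8, 1), (9, 1)]
w, b = train_perceptron(examples)
is_large_v2 = make_classifier(w, b)

print(w, b)
print([is_large_v2(x) for x in (4, 6)])
```

On this data the perceptron converges after four passes to w = 1.0 and b = -5.0, so the learned boundary sits at x = 5, the same threshold written by hand in `is_large_v1`. The behaviour is identical; the difference is where the rule came from: code in one case, data in the other.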

Software 3.0 - this is where things change fundamentally. Instead of writing code or training a model, we prompt. Software 3.0 isn’t defined by LLMs alone, but by what they enable: a new, open-ended interface to software. Unlike the fixed, opaque systems of Software 2.0, these models are adaptable, and we can interrogate them directly in plain language. Prompting lets us steer them in real time, reusing a single system across many tasks without retraining. They’re becoming multimodal, able to interpret text, images, audio and more, and they are increasingly connected to tools that let them act on and integrate with other systems. Tasks that once needed handcrafted logic or narrow models can now be handled through a prompt.

Hostile takeover in progress.

We’ve entered a new paradigm, and it’s already reshaping what it means to be a software engineer. Increasingly, our job is less about constructing deterministic systems and more about orchestrating Software 3.0 systems - unblocking agents, managing context, designing prompts, and building flows that help these models operate effectively.

We’re already seeing this shift in action with agentic coding tools like Claude Code, but the transformation doesn’t stop at developer tooling.

More significantly, Software 3.0 is beginning to rewrite how we interact with software altogether. Traditionally, we’ve interacted with software through GUIs - carefully designed layers that translate our intentions into a series of clicks, toggles, and workflows. A good UI tries to reduce the friction between what we want and how we get it. Even the best UI, however, is still a layer between you and your goal.

A recent example for me was booking a train ticket with Trainline (other train booking systems are available). I knew what I wanted: to be in London by a specific time. I didn’t care how I got there, which carrier I used, or what flow of screens I had to click through. The UI, however, had its own ideas - it applied an expired railcard to my booking. Not a disaster, but still a failure to reflect or respect my actual intent. I didn’t want to “use” Trainline. I wanted to be on the right train, at the right time, for the right price.

This is what Software 3.0 aims to solve. Instead of clicking through someone else’s interface, we’ll soon be able to express intent directly - through natural language, voice, or some new input we haven’t yet imagined. I say “I need to be in London by 10.30am”, and from my needs the system should infer that I want the cheapest possible ticket and that any valid railcard I hold should be applied. That’s it. The software handles the rest. No dropdowns. No expired discounts. Just the outcome you wanted.
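What "the software handles the rest" might look like, once the request has been understood, can be sketched in Python. This is a minimal, hypothetical illustration: it assumes an LLM has already turned the sentence into a structured `Intent` (that step is not shown), and the `Train`, `Railcard`, `Intent` and `resolve` names, the fares and the dates are all made up. It also simplifies by assuming a railcard takes the same fraction off every fare. The point is that the constraints from the example (arrive on time, pay the least, never apply an expired railcard) are checked explicitly, and the result comes back as a summary the traveller can confirm or correct.

```python
from dataclasses import dataclass
from datetime import date, time
from typing import Optional


@dataclass
class Train:
    departs: time
    arrives: time
    fare: float  # hypothetical fare in GBP


@dataclass
class Railcard:
    name: str
    discount: float  # fraction taken off the fare, e.g. 1/3
    expires: date


@dataclass
class Intent:
    # Structured form of "I need to be in London by 10.30am".
    # Assumption: an LLM (not shown) has already extracted this from the request.
    destination: str
    arrive_by: time


def resolve(intent: Intent, trains: list[Train], railcards: list[Railcard],
            today: date) -> Optional[tuple[Train, float, str]]:
    # Assumption: `trains` already lists only services to intent.destination.
    # Hard constraint: the train must arrive by the requested time.
    on_time = [t for t in trains if t.arrives <= intent.arrive_by]
    if not on_time:
        return None
    # Only railcards that have not expired may be applied.
    valid = [r for r in railcards if r.expires >= today]
    card = max(valid, key=lambda r: r.discount, default=None)
    discount = card.discount if card else 0.0
    # Simplification: the discount is the same fraction for every fare,
    # so the cheapest base fare is also the cheapest discounted fare.
    train = min(on_time, key=lambda t: t.fare)
    price = round(train.fare * (1 - discount), 2)
    # Echo the interpretation back so the user can confirm or correct it.
    card_note = f" with {card.name}" if card else " (no valid railcard)"
    summary = (f"{train.departs:%H:%M} to {intent.destination}, "
               f"arriving {train.arrives:%H:%M}, £{price:.2f}{card_note}")
    return train, price, summary


trains = [
    Train(time(7, 0), time(9, 15), 45.00),
    Train(time(8, 0), time(10, 20), 38.50),
    Train(time(9, 0), time(11, 5), 20.00),  # cheapest, but arrives too late
]
expired = [Railcard("16-25 Railcard", 1 / 3, date(2024, 1, 31))]
intent = Intent("London", time(10, 30))
print(resolve(intent, trains, expired, today=date(2025, 6, 20))[2])
```

With only an expired railcard, the sketch picks the 08:00 service at £38.50 rather than silently applying the discount; pass a railcard that is still valid and the same train comes back at £25.67.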

This shift also has major implications for designers. In the Software 1.0 world, UI and UX design were about bridging human intention and machine logic through carefully crafted interfaces - layouts, typography, spacing, hover states - all the elements that made software feel usable. In the Software 3.0 world, that interface is increasingly natural language or raw intent. Design becomes less about buttons and kerning, and more about shaping how intent is expressed, interpreted, and confirmed. The designer’s role therefore evolves into choreographing this interaction. It’s no longer just about making things look good - it’s about making systems legible to intention.

Of course, this model has limitations. LLMs - like all neural networks - are fallible. They hallucinate, they overreach, they forget. That's why interaction design becomes even more critical. The key is tightening the feedback loop. When the system can quickly show you what it thinks you meant, and you can easily verify or correct it, that’s when it becomes more than just an assistant. It starts to feel like a co-pilot.

As these systems mature, though, more and more products will be designed not around the interface, but around the outcome. We’ll move from navigating software to simply stating what we want. The software - intelligent, flexible and increasingly autonomous - will handle the rest. With any luck, that means I’ll finally get the right train ticket.

This is the promise of Software 3.0, and I am here for it.