Unlock AI with MCP

The Model Context Protocol (MCP) represents a fundamental shift in how we think about AI interactions.

Traditionally, LLMs could only draw on their training data or on whatever limited context we supplied in the prompt (unless you set up a full RAG solution). MCP lets AI tools connect directly to external systems, such as APIs or databases, to fetch live data or to control those systems.

In a sense, MCP is to LLMs what HTTP is to the web. It defines a standard way for LLMs to discover the tools at their disposal, with each tool wrapped in contextual information that describes what it does.
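Concretely, an MCP client discovers a server's tools with a `tools/list` request, and each tool comes back as a name, a description and a JSON Schema describing its inputs (`inputSchema`). The sketch below models that descriptor in plain TypeScript for the `get-orders` tool used later in this post. The `ToolDescriptor` type and the `renderForModel` helper are hypothetical names for illustration, not part of the MCP SDK, and how a real client presents tools to its model varies from client to client.

```typescript
// Simplified shape of one entry in a `tools/list` result
// (optional fields such as annotations are omitted).
interface ToolDescriptor {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

const getOrders: ToolDescriptor = {
  name: "get-orders",
  description: "Get orders made by customers from the database.",
  inputSchema: {
    type: "object",
    properties: {
      days: {
        type: "number",
        description: "Number of days into the past to retrieve orders for.",
      },
    },
    required: ["days"],
  },
};

// Hypothetical helper: flatten a descriptor into text a client might place
// in the model's context so the model knows the tool exists.
function renderForModel(tool: ToolDescriptor): string {
  const params = Object.entries(tool.inputSchema.properties)
    .map(([key, p]) => `${key} (${p.type}): ${p.description ?? ""}`)
    .join("; ");
  return `${tool.name}: ${tool.description} Parameters: ${params}`;
}

console.log(renderForModel(getOrders));
```

The name and description do most of the work here: they are all the model has to go on when deciding whether the tool fits the task.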

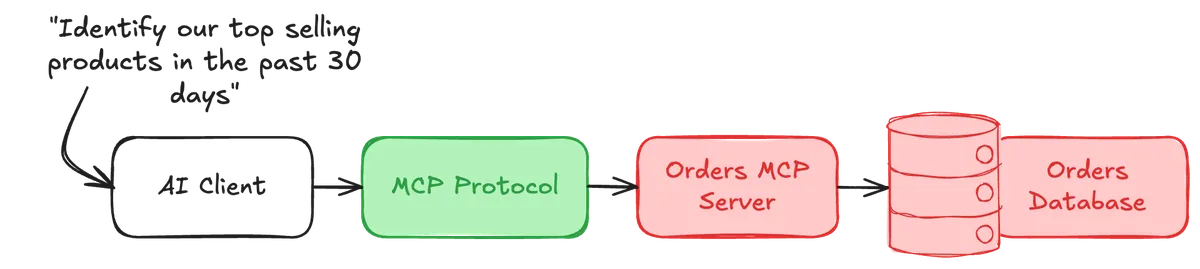

For example, an AI sales agent might use a flow like the one above: the AI client connects to an MCP server, which uses something like the MCP SDK to implement a tool that connects to the database and retrieves orders.

The tool can also accept parameters, such as `days`, which modify the query it sends to the database. The results are then fed back to the AI as context so that it can complete its task.
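Ultimately, the `days` parameter has to become part of a database query. Here is a minimal sketch, assuming a SQL database with a hypothetical `orders` table and `created_at` column, and a driver that accepts positional `$1` placeholders (as node-postgres does). The handler computes a cutoff date and passes it as a bound value instead of splicing model-supplied input into the SQL string.

```typescript
// Hypothetical query shape: the table and column names are assumptions.
interface ParameterizedQuery {
  text: string;
  values: unknown[];
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Turn the tool's `days` argument into a parameterized query.
// `now` can be injected so the result is deterministic.
function buildOrdersQuery(days: number, now: Date = new Date()): ParameterizedQuery {
  if (!Number.isFinite(days) || days < 0) {
    throw new RangeError("days must be a non-negative number");
  }
  const cutoff = new Date(now.getTime() - days * MS_PER_DAY);
  return {
    text: "SELECT * FROM orders WHERE created_at >= $1 ORDER BY created_at DESC",
    values: [cutoff.toISOString()],
  };
}

const query = buildOrdersQuery(7, new Date("2024-05-08T00:00:00Z"));
console.log(query.values[0]); // 2024-05-01T00:00:00.000Z
```

Validating `days` before it reaches the database matters because the value is chosen by the model, not typed in by a trusted user.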

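```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { z } from "zod";

// Server name and version are illustrative
const server = new McpServer({ name: "sales-server", version: "1.0.0" });

// Hypothetical stand-in for the real database query
async function queryOrders(days: number): Promise<unknown[]> {
  return [];
}

server.tool(
  "get-orders",
  "Get orders made by customers from the database.",
  // zod schema for the parameters; .describe() supplies the text the LLM sees
  { days: z.number().describe("Number of days into the past to retrieve orders for.") },
  async ({ days }) => {
    // Query sales using the days parameter
    const data = await queryOrders(days);
    return {
      content: [{ type: "text", text: JSON.stringify(data) }]
    };
  }
);

// Expose the server over stdio so an MCP client can connect to it
await server.connect(new StdioServerTransport());
```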

Notice that nothing in this code states when the tool should be triggered. The LLM decides when to invoke it based on the name, parameters and description we give it, which means it can use the tool flexibly as part of its reasoning.
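Under the hood, once the model decides the tool is relevant, the client sends a JSON-RPC `tools/call` request that names the tool and supplies its arguments. The server then routes that request by name. The sketch below imitates this server-side routing with a plain `Map`. The request and response shapes are simplified from the MCP specification's `tools/call` message, but `handleToolsCall` and the stubbed handler are hypothetical, and a server built with the SDK performs this dispatch for you.

```typescript
// Simplified JSON-RPC request for invoking a tool.
interface ToolsCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

interface ToolResult {
  content: { type: "text"; text: string }[];
}

type ToolsCallResponse =
  | { jsonrpc: "2.0"; id: number; result: ToolResult }
  | { jsonrpc: "2.0"; id: number; error: { code: number; message: string } };

type ToolHandler = (args: Record<string, unknown>) => Promise<ToolResult>;

// Registry keyed by tool name: the name the model picked is the only "trigger".
const tools = new Map<string, ToolHandler>([
  [
    "get-orders",
    // Stub standing in for the real database query.
    async (args) => ({
      content: [{ type: "text", text: `orders from the last ${args.days} days` }],
    }),
  ],
]);

async function handleToolsCall(req: ToolsCallRequest): Promise<ToolsCallResponse> {
  const handler = tools.get(req.params.name);
  if (!handler) {
    // Unknown tools are reported as a JSON-RPC "invalid params" error.
    return {
      jsonrpc: "2.0",
      id: req.id,
      error: { code: -32602, message: `Unknown tool: ${req.params.name}` },
    };
  }
  return { jsonrpc: "2.0", id: req.id, result: await handler(req.params.arguments) };
}

handleToolsCall({
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "get-orders", arguments: { days: 7 } },
}).then((res) => {
  if ("result" in res) console.log(res.result.content[0].text); // orders from the last 7 days
});
```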

We're also not limited to tools: MCP servers can expose resources and prompts too. Resources are contextual snippets of information, such as a database URL. Prompts are, as the name suggests, predefined prompts.

For instance, a resource could tell a data-querying tool which specific environment it is querying. The tool would then return the data, and a prompt could turn that data into a report with a consistent format.
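In the MCP specification, reading a resource returns `contents` entries that carry a `uri` and `text`, and fetching a prompt returns a list of `messages`. The sketch below uses simplified versions of those shapes to wire together the chain described above. The `config://environment` URI, the `readEnvironment`, `queryOrders` and `salesReportPrompt` functions, and the sample rows are all hypothetical stand-ins. A real client would obtain each piece from a server via `resources/read`, `tools/call` and `prompts/get`.

```typescript
// Simplified result of `resources/read`.
interface ResourceContents {
  contents: { uri: string; mimeType?: string; text: string }[];
}

// Simplified result of `prompts/get`.
interface PromptResult {
  messages: { role: "user" | "assistant"; content: { type: "text"; text: string } }[];
}

// Hypothetical resource: tells the tool which environment it is querying.
function readEnvironment(): ResourceContents {
  return { contents: [{ uri: "config://environment", mimeType: "text/plain", text: "staging" }] };
}

// Hypothetical tool: returns stub order rows for the given environment.
function queryOrders(environment: string): { id: number; total: number; env: string }[] {
  return [
    { id: 1, total: 120, env: environment },
    { id: 2, total: 80, env: environment },
  ];
}

// Hypothetical prompt template: asks for a report in a fixed format.
function salesReportPrompt(data: string): PromptResult {
  return {
    messages: [
      {
        role: "user",
        content: {
          type: "text",
          text: `Write a sales report with the sections Summary, Totals and Notes using this data:\n${data}`,
        },
      },
    ],
  };
}

// Chain: resource -> tool -> prompt.
const environment = readEnvironment().contents[0].text;
const orders = queryOrders(environment);
const reportPrompt = salesReportPrompt(JSON.stringify(orders));
console.log(reportPrompt.messages[0].content.text);
```

The prompt template carries the fixed report structure, which is why the output stays consistent no matter which data the tool returns.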

Moving one level up the abstraction, you can run several MCP servers together, letting the LLM invoke each tool as and when it needs to. Because MCP servers are modular, each handling a specific task, you can creatively assemble workflows that connect disparate systems. For example, one MCP server could fetch the data, another could generate a report from it, and a third could enter the data into a CRM.
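When a client is connected to several MCP servers, it has to merge their tool lists for the model and send each call back to the server that owns the tool. Here is a minimal sketch of that bookkeeping. It assumes three hypothetical servers matching the example above (`orders`, `reports` and `crm`) with made-up tool names. Real MCP clients usually keep one connection per server and manage this themselves, and they differ in how they handle two servers that expose the same tool name. Here a duplicate is simply rejected.

```typescript
interface ServerInfo {
  name: string;
  tools: string[];
}

// Hypothetical aggregator: maps each tool name to the server that provides it.
class ToolRouter {
  private owners = new Map<string, string>();

  register(server: ServerInfo): void {
    for (const tool of server.tools) {
      const existing = this.owners.get(tool);
      if (existing !== undefined) {
        throw new Error(`Tool "${tool}" is already provided by "${existing}"`);
      }
      this.owners.set(tool, server.name);
    }
  }

  // Combined tool list shown to the model, in registration order.
  allTools(): string[] {
    return [...this.owners.keys()];
  }

  // Which server should receive a `tools/call` for this tool?
  serverFor(tool: string): string | undefined {
    return this.owners.get(tool);
  }
}

const router = new ToolRouter();
router.register({ name: "orders", tools: ["get-orders"] });
router.register({ name: "reports", tools: ["generate-report"] });
router.register({ name: "crm", tools: ["create-crm-entry"] });

console.log(router.allTools());
console.log(router.serverFor("generate-report")); // reports
```

From the model's point of view there is just one flat list of tools. The routing table is what lets each call land on the right server.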

As you can see, MCP servers offer a powerful and flexible way to extend LLMs by enriching the context and tools they can access. Better still, the ecosystem grows richer every day: there are already thousands of MCP servers available to integrate into your workflows.

I've compiled links to some popular MCP collections and registries below: